Concepts

The concepts explained on this page serve as a foundation for getting the most out of the tool. We focus primarily on CI/CD Resources and CI/CD Pipelines.

- CI/CD Pipelines

    - Pipeline (Definition)

    - Pipeline Execution

    - Potential Pipeline Execution

- CI/CD Resources

    - Credentials

    - Repositories

    - Execution Agents

CI/CD Platform & System

The standard SDLC starts with Design and Architecture and proceeds through multiple iterations of Coding, Continuous Integration and Continuous Deployment workflows, which allow us to expedite change to the Runtime.

Observes focuses on the Continuous Integration and Continuous Deployment stages, which are rarely enabled by a single tool or platform. Additionally, these stages of development inherently, and perhaps unexpectedly, touch the runtime.

- CI/CD System refers to the combination of multiple CI/CD Platforms used to expedite change.

- CI/CD Platforms refer to the different products and offerings in the CI/CD ecosystem. These enable users to adopt CI/CD practices and include a series of components. Each component may have an independent RBAC and configuration to manage:

    - Version Control Systems & Repositories

    - Pipelines / workflows

    - GitOps Platforms

    - Binary Managers

    - Execution Agents

CI/CD Pipelines

A pipeline is a structured set of instructions that, when followed correctly, produce a desired outcome. Pipelines help ensure instructions are executed consistently and reliably, with fewer surprises.

Pipeline (Definition)

Creating a pipeline definition is equivalent to creating a structure, a placeholder. This structural concept has properties, which give it its soul - properties such as the name and configuration file path.

A pipeline definition is not an execution of the pipeline. Think of it as a box.

Noteworthy attributes of a pipeline definition:

- The permissions it has to resources, which will dictate the access it has to credentials, repositories, and execution agents

- The configuration file path, which will dictate the instructions to be executed when running the pipeline
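As a minimal sketch of the attributes above, a pipeline definition can be modelled as a plain record. The class name, field names, and resource identifiers below are hypothetical illustrations, not part of any product API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PipelineDefinition:
    """A pipeline definition: the 'box', not a run of the pipeline."""

    name: str
    # Path of the file holding the instructions to execute.
    config_file_path: str
    # Identifiers of the resources this definition is permissioned to use
    # (hypothetical naming scheme: "<resource type>/<resource name>").
    permitted_resources: frozenset[str] = field(default_factory=frozenset)

    def can_access(self, resource_id: str) -> bool:
        """Return True if the definition has been granted the resource."""
        return resource_id in self.permitted_resources


# Example definition (all values are made up for illustration).
deploy = PipelineDefinition(
    name="deploy-web",
    config_file_path="pipelines/deploy.yml",
    permitted_resources=frozenset({"service-connection/prod", "agent-pool/linux"}),
)
```

Nothing runs when the record is created; it only captures what a future execution would be allowed to touch.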

Pipeline Execution

Associated with a single pipeline definition, we have multiple executions. A pipeline execution is the instantiation of said pipeline at a given time by an execution agent. The execution agent obtains the configuration file and accesses all the resources it requires to execute the instructions in the file.

The flow of an execution is determined by the pipeline configuration as it exists at the time of the trigger. The variables are the configuration file path and the branch (a pointer to a commit) specified for the run.

Noteworthy attributes of a pipeline execution:

- The (configuration file path, branch) combination, which dictates the steps executed

- The resources the execution accessed (credentials, repositories, execution agents)

- The resources the execution could have accessed (credentials, repositories, execution agents)

- The trigger of the pipeline execution (was it automatically/manually triggered? who triggered it?)
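The attributes above can be sketched as a record, under the assumption that resources are tracked by simple string identifiers. All names here are hypothetical; the point is the difference between what an execution accessed and what it could have accessed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineExecution:
    """One run of a pipeline definition at a given time."""

    config_file_path: str
    branch: str
    accessed: frozenset[str]      # resources the run actually used
    could_access: frozenset[str]  # resources the run was permissioned to use
    triggered_by: str             # who or what started the run
    manual: bool                  # True for a manual trigger, False for automatic

    def unused_permissions(self) -> frozenset[str]:
        """Resources the run could have accessed but did not."""
        return self.could_access - self.accessed


# Example run (all values are made up for illustration).
run = PipelineExecution(
    config_file_path="pipelines/deploy.yml",
    branch="main",
    accessed=frozenset({"agent-pool/linux"}),
    could_access=frozenset({"agent-pool/linux", "variable-group/prod-secrets"}),
    triggered_by="alice",
    manual=True,
)
unused = run.unused_permissions()
```

In this example `unused` contains only `variable-group/prod-secrets`, a permission that the run held but never exercised.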

Potential Pipeline Execution

Potential pipeline executions are all the combinations of (configuration file path, branch) that could become a pipeline execution at any moment, usually just a button click or CI trigger away. A configuration file stored in a git repository is a single version of that file. However, branches and tagged commits in the repository may hold different versions of that "same" pipeline configuration file path. The pipeline could, at any time, be executed with any of these combinations.
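The combinations described above can be enumerated with a few lines of code. This is a sketch that assumes you already have a mapping from each ref (branch or tag) to the file paths present at that ref; the function name and the sample data are hypothetical.

```python
def potential_executions(config_file_path, refs_to_files):
    """List (config path, ref) pairs that could become a pipeline execution.

    refs_to_files maps a branch or tag name to the set of file paths
    present at that ref. A ref only counts if the configuration file
    exists there.
    """
    return [
        (config_file_path, ref)
        for ref, files in sorted(refs_to_files.items())
        if config_file_path in files
    ]


# Sample repository state (made up for illustration).
refs = {
    "branch-1": {"pipelines/deploy.yml"},
    "branch-2": {"pipelines/deploy.yml", "README.md"},
    "tag-4": {"README.md"},  # no pipeline configuration at this tag
}
candidates = potential_executions("pipelines/deploy.yml", refs)
```

Each pair in `candidates` may carry a different version of the instructions, even though the file path is the same.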

graph TD
    A[Pipeline Configuration Repo] --> B[Branch 1]
    A --> C[Branch 2]
    A --> D[Branch 3]
    A --> E[Tagged Commit 4]
    A --> F[Tagged Commit 5]
    B --> G[Potential Pipeline Execution Branch 1]
    C --> H[Potential Pipeline Execution Branch 2]
    D --> I[Potential Pipeline Execution Branch 3]
    E --> J[Potential Pipeline Execution Tagged Commit 4]
    F --> K[Potential Pipeline Execution Tagged Commit 5]

CI/CD Resources

Pipelines are really just instructions. What gives them their power are the resources they can access. Here we introduce the concept of CI/CD resources.

Why do they matter?

Without the resources around it, a pipeline is just a recipe in a closed recipe book - a process waiting to be executed. Ensuring the resources are accessible under the right conditions, at the time of the execution, is crucial.

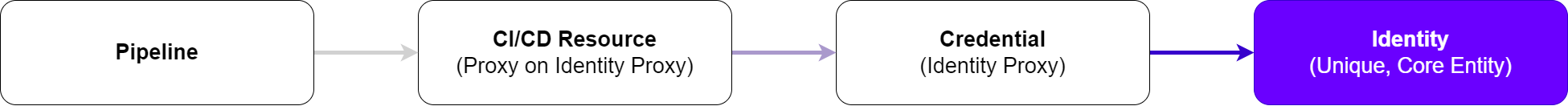

Credentials as Identity Proxies

Identities are unique and, by nature, cannot be stolen or shared. Credentials are our attempt to represent this uniqueness in the digital world. In this sense, credentials serve as an identity proxy - a way to turn a real-world identity into a usable digital format.

CI/CD resources can be seen as credentials. A privileged credential is therefore a privileged resource, which a pipeline could be permissioned to use in a pipeline execution, so access to privileged resources should be controlled by access conditions.

Azure DevOps (ADO) CI/CD Resources, like those used in a pipeline, often stand as a secondary representation of a credential. And, as we've seen, credentials are themselves an identity proxy.

Azure DevOps Identity Proxy

In Azure DevOps, CI/CD Resources come in various forms. Ultimately, each type serves as a proxy for a credential, thus, an indirect link to an identity.

Example: Azure DevOps CI/CD Resources as "Proxies on Identity Proxies"

1. Agent Pools: Agents, where the CI/CD processes run, may have access to credentials (digital certificates, SSH keys, and other credential types). Access to an agent, a CI/CD Resource, means access to the credentials it has access to, resulting in access to the identities those credentials proxy. Authentication and authorisation to services is done based on those credentials. Moreover, agents represent access to a running environment, which may or may not be self-hosted.

2. Service Connections: Service connections allow pipelines to interact with external services by using stored credentials (like tokens or API keys). Access to a service connection, a CI/CD Resource, means access to the credentials it stores, resulting in access to the identities those credentials proxy. Authentication and authorisation to services is done based on those credentials.

3. Variable Groups: Variable groups hold sensitive values (e.g., passwords, keys) that pipelines use without direct access to the raw credentials. Access to a variable group, a CI/CD Resource, means access to the credentials it stores, resulting in access to the identities those credentials proxy. Authentication and authorisation to services is done based on those credentials.

4. Secure Files: Secure files, such as certificates or keys, are referenced in pipelines without exposing their contents. Access to a secure file, a CI/CD Resource, means access to the credentials it stores, resulting in access to the identities those credentials proxy. Authentication and authorisation to services is done based on those credentials.

5. Repositories: Repositories are not as straightforward. One scenario to imagine is, if a GitOps process uses a repository as a source of truth, it means there is an external process monitoring the contents of that repository. That external process may have its own access to ensure the state between the repository and the runtime is synced. In this scenario, access to the repository could be seen, indirectly, as providing access to credentials used by the external processes.

6. Environments: "An environment is a grouping of resources where the resources themselves represent actual deployment targets" - Accessing the environment grants access to service connections and secure files that have been configured to access those targets.
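The chain described in the list above (resource, then credential, then identity) can be sketched as two lookups. The mappings and every name in them are hypothetical sample data, not something read from Azure DevOps.

```python
# Which credentials each CI/CD resource gives access to (sample data).
RESOURCE_CREDENTIALS = {
    "service-connection/prod": ["sp-prod-token"],
    "secure-file/cluster-key": ["ssh-key-cluster"],
    "agent-pool/linux": ["ssh-key-cluster", "managed-identity-build"],
}

# Which identity each credential is a proxy for (sample data).
CREDENTIAL_IDENTITY = {
    "sp-prod-token": "prod-deployer",
    "ssh-key-cluster": "cluster-admin",
    "managed-identity-build": "build-agent",
}


def reachable_identities(resources):
    """Return the identities reachable through the given resources."""
    identities = set()
    for resource in resources:
        for credential in RESOURCE_CREDENTIALS.get(resource, ()):
            identity = CREDENTIAL_IDENTITY.get(credential)
            if identity is not None:
                identities.add(identity)
    return identities


agent_identities = reachable_identities(["agent-pool/linux"])
```

With this sample data, access to the agent pool alone reaches both the `cluster-admin` and `build-agent` identities, which is why a resource is best judged by the identities behind it.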

Execution Agents

Often overlooked, execution agents are where the actions are performed. All credentials, code, and artefacts flow through them. Having access to one of them means you potentially have access to any pipeline executed within its scope, plus any resources used by that pipeline. Additionally, they can be used for lateral movement to other infrastructure, such as Cloud Service Providers, if you are using things such as managed identities. They can also be used to compromise an artefact that ends up being deployed in the runtime.

| Access Vectors | Impact |
|---|---|
| Insider threat; Compromised user account; Malicious or vulnerable binary/package (supply chain) | Data/secret exfiltration; Denial of Service / Wallet; Persistence / Lateral movement; Compromise of internal artefacts; Stop/tamper with security tooling |

If you do not prioritise their management, access to the build agent through any of the access vectors above can mean chaos!

Platform Management

With the platform management concepts, you'll be able to assess if your CI/CD Resources and Pipelines are privileged, shared, protected and segregated as expected, and take actions accordingly.

Logic Containers

There may be hundreds of resources to manage. Logic Containers help group resources for easier management.

How should you split your environment?

How you should split your environment depends on the level of granularity that suits you, from the most basic split between Production and Non-Production all the way to Environment x Purpose x Team. A few options are presented below. You can also mix and match.

- Simple split. Example: dev, prod

    - Good for small teams or early stage

- Split by environment and function. Example: dev-app, dev-infra, prod-app, prod-infra

    - More granular, supports separation of concerns

- Split by environment, function, and team. Example: dev-app-teamA, dev-infra-teamA, prod-app-teamA, prod-infra-teamA, ... and likewise for team B

    - Even more isolation and control
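The three splits above follow the same naming pattern, so the container names can be generated rather than typed by hand. This is a small sketch; the function name is hypothetical and the hyphen-joined naming simply mirrors the examples.

```python
from itertools import product


def logic_container_names(environments, functions=(), teams=()):
    """Build Logic Container names as environment[-function[-team]].

    Empty dimensions are skipped, so the same function covers the
    simple split as well as the more granular ones.
    """
    dimensions = [dim for dim in (environments, functions, teams) if dim]
    return ["-".join(parts) for parts in product(*dimensions)]


simple = logic_container_names(["dev", "prod"])
by_function = logic_container_names(["dev", "prod"], ["app", "infra"])
by_team = logic_container_names(["dev", "prod"], ["app", "infra"], ["teamA", "teamB"])
```

Here `simple` is `["dev", "prod"]`, `by_function` holds four names, and `by_team` holds eight, which shows how quickly the number of containers grows with each added dimension.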

The default, basic, Logic Containers are a straightforward way to split the resources into one of two boxes: "stuff to care about" and "stuff not to care about". It is hard to cater for every team's development environments. Create more Logic Containers to support your operations.

Correctly classifying and allocating CI/CD resources is crucial because it will inform how you'll manage each resource. At the moment allocation of resources into Logic Containers is manual, but stay tuned for automatic allocation.

What Matters?

Ideas!

- A service connection with access to production environments

- A service connection with access to trusted registries and repositories

- A variable group with access to production-grade secrets

- A secure file that allows you to SSH into a production cluster

- A repository that is monitored by a GitOps workflow

- An agent pool that runs pipelines from a restricted project

Resource Lifecycle

Ensuring CI/CD Resources have a well-defined onboarding process is one of the keys for success. A good resource lifecycle management process looks like:

- When a new CI/CD Resource is discovered (or as it is being onboarded), additional, verifiable metadata about the identity it ties to needs to be provided

- According to its access level and attributes, it should be allocated to the right Logic Container

- According to the resource criticality, the appropriate approvals and checks should be applied (this can be informed by the Logic Container)

- Continuous evaluation of the resource access level and attributes

Note: Allocating resources to the right Logic Container and continuously evaluating resource access levels are manual processes today. Stay tuned for automatic allocation and evaluation.

Policy Management

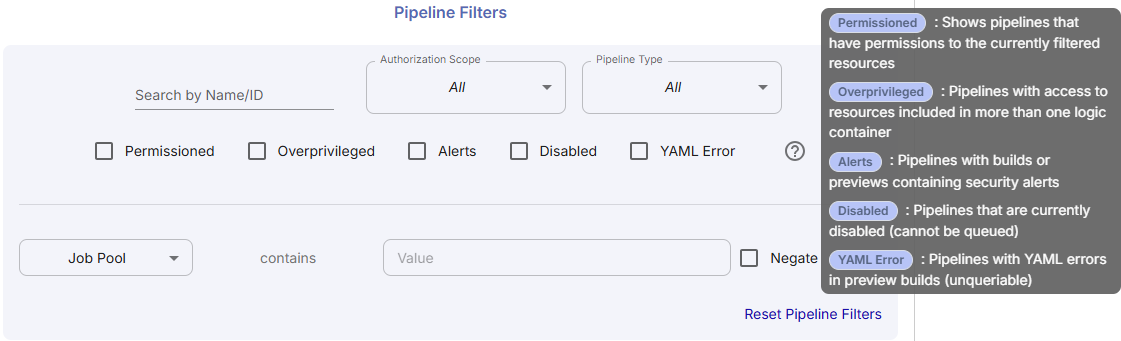

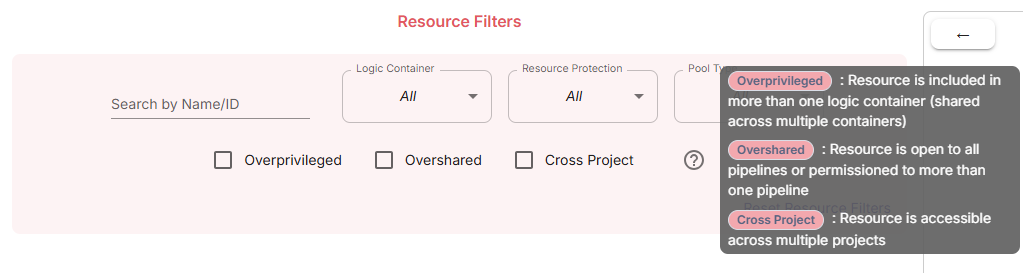

Policy unites the discovery of CI/CD Platform resources and pipelines with the Platform Management concepts, enabling you to set policy on what good looks like. Some policy filters are more resource-focused and others more pipeline-focused. You can combine the two.

Base Policy Set

Policy runs the world. By default, the following scenarios are highlighted:

- Is a resource accessible by the expected pipelines? Is the resource overshared?

- Is a resource accessing the expected Logic Containers? Is the resource overprivileged?

- Is a resource protected by access conditions? Is the resource protected?

- Is a resource cross-project? Does the resource exist in more than one project? Is the resource shared across projects?

- Is a pipeline permissioned into resources from different Logic Containers? Is the pipeline overpermissioned?

- Is a pipeline accessing resources from other projects? Cross-project resource access?

Filters for this base policy set are available in the Resource & Pipeline Tracker.
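To make the base policy questions concrete, here is a sketch of four of them written as predicates over a simple data model. The classes, fields, and function names are hypothetical and do not describe how the tool implements its filters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    project: str
    logic_container: str
    permitted_pipelines: frozenset[str]  # pipelines that can use it today
    expected_pipelines: frozenset[str]   # pipelines that should use it
    has_access_conditions: bool          # approvals or checks configured


@dataclass(frozen=True)
class Pipeline:
    name: str
    project: str
    resources: tuple[Resource, ...]      # resources it is permissioned into


def is_overshared(resource: Resource) -> bool:
    """Accessible by pipelines beyond the expected ones."""
    return bool(resource.permitted_pipelines - resource.expected_pipelines)


def is_unprotected(resource: Resource) -> bool:
    """No access conditions guard the resource."""
    return not resource.has_access_conditions


def is_overpermissioned(pipeline: Pipeline) -> bool:
    """Permissioned into resources from more than one Logic Container."""
    return len({r.logic_container for r in pipeline.resources}) > 1


def has_cross_project_access(pipeline: Pipeline) -> bool:
    """Uses at least one resource that lives in another project."""
    return any(r.project != pipeline.project for r in pipeline.resources)


# Sample data (made up for illustration).
prod_conn = Resource(
    "service-connection/prod", "web", "prod",
    frozenset({"deploy-web", "nightly-tests"}), frozenset({"deploy-web"}), False,
)
dev_vars = Resource(
    "variable-group/dev", "shared", "dev",
    frozenset({"deploy-web"}), frozenset({"deploy-web"}), True,
)
deploy_web = Pipeline("deploy-web", "web", (prod_conn, dev_vars))
```

With this sample data, `prod_conn` is both overshared and unprotected, and `deploy_web` is overpermissioned and has cross-project access. Resource-focused and pipeline-focused checks can be combined by calling both kinds of predicate on the same pipeline.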

Pipeline Policy Filters

Resource Policy Filters